GlusterFS

Josip Maslać, nabava.net

Problem

Starting point

Typical use case

user upload

New configuration

Taking advantage of the situation

Problem


Requirements

transparent to applications

setup and management - as "simple" as possible

possible solutions:

lsyncd ("configured" rsync)

DRBD (2-node only)

Ceph (looks complicated)

Solution

GlusterFS

open source distributed storage

scale-out network-attached storage file system

purchased by Red Hat in 2011

licence GNU GPL v3

Main use cases

storing & accessing LARGE amounts of data (think PBs)

ensuring High Availability (file replication)

"backup"

Info

software only

in userspace

runs on commodity hardware

heterogeneous hosts (xfs, ext4, btrfs..)

file systems that support extended attributes (xattrs)

client-server

no external metadata

External metadata

no external metadata

Building blocks

Servers/nodes ⇒ trusted storage pool (cluster)

Bricks

actual storage on disks

mkdir /bricks/brick1

Volumes

clients access data through volumes

by mounting volume to local folder
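Since clients see a volume only as a mounted folder, an application can accidentally write to the empty local directory when the mount is missing. A minimal sketch of a guard, assuming a Linux client where mounts are listed in /proc/mounts and GlusterFS mounts carry a type containing "glusterfs" (typically `fuse.glusterfs`). The function name `is_gluster_mount` and its optional second argument are hypothetical helpers for illustration; `/volumes/test` is the mount point used in the demo.

```shell
#!/bin/sh
# is_gluster_mount DIR [MOUNTS_FILE]
# Succeeds if DIR is listed as a mount point whose filesystem type contains "glusterfs".
# MOUNTS_FILE defaults to /proc/mounts (Linux); the override exists only for testing.
is_gluster_mount() {
    dir=$1
    mounts=${2:-/proc/mounts}
    # Fields in /proc/mounts: device mountpoint fstype options dump pass
    awk -v d="$dir" '$2 == d && $3 ~ /glusterfs/ { found = 1 } END { exit !found }' "$mounts"
}

if is_gluster_mount /volumes/test; then
    echo "/volumes/test is a GlusterFS mount"
else
    echo "/volumes/test is not a GlusterFS mount; refusing to write" >&2
fi
```

A check like this could run before an upload handler writes any files.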

Building blocks

Volume types

Distributed (default)

Distributed volume

Elastic hashing
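The idea behind hash-based placement can be illustrated with a toy sketch: the file name alone decides which brick holds the file, so no central index has to be consulted. This is NOT the algorithm GlusterFS actually uses; it swaps in a plain `cksum` CRC and a modulo purely for illustration, and the function name `pick_brick` is hypothetical.

```shell
#!/bin/sh
# Toy illustration of hash-based file placement (not GlusterFS's real algorithm).
# pick_brick NAME COUNT prints a brick index in 0..COUNT-1 derived from NAME.
pick_brick() {
    name=$1
    count=$2
    # cksum prints "<crc> <byte count>"; keep only the CRC.
    crc=$(printf '%s' "$name" | cksum | cut -d ' ' -f 1)
    echo $((crc % count))
}

# The same name always maps to the same brick.
for f in file123.txt photo.jpg report.pdf; do
    echo "$f -> brick $(pick_brick "$f" 2)"
done
```

A plain modulo would reshuffle most files whenever a brick is added, which is one reason a real system needs something more elaborate than this sketch.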

Volume types

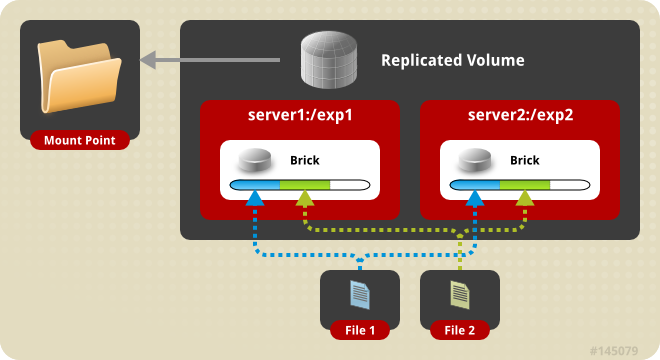

Replicated

synchronous replication!

high availability, self-healing

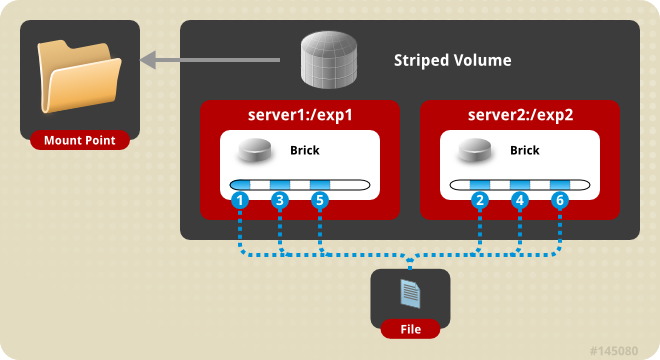

Volume types

Striped

Demo

we are building:

2 nodes: srv1 & srv2

replicated volume

Building blocks

Servers/nodes

Bricks

Volumes

Nodes setup

# srv1 & srv2

apt-get install glusterfs-server

service glusterfs-server start

# srv1

gluster peer probe srv2

# srv1

gluster pool list

--

UUID Hostname State

0f5cfce3-d6ca-4887-afa1-e9465f148ff7 srv2 Connected

69091d19-aa4f-48be-a6a3-0661689cdde7 localhost Connected

Gluster console

gluster

> peer probe srv2

> pool list

> ...

Bricks

# srv1

mkdir -p /bricks/brick-srv1

# srv2

mkdir -p /bricks/brick-srv2

After this you DO NOT tamper with these folders!

…if necessary only in read mode
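With the peers probed and the brick folders in place, it can be worth confirming that every node in the pool is connected before creating a volume. A minimal sketch that reads `gluster pool list` output and prints any peer whose state is not "Connected". It assumes the three-column layout (UUID, Hostname, State) with a single header row; the function name `disconnected_peers` is hypothetical.

```shell
#!/bin/sh
# disconnected_peers: reads `gluster pool list` output on stdin and prints
# the hostname of every peer whose State column is not "Connected".
disconnected_peers() {
    # Skip the header row; assumed columns are UUID, Hostname, State.
    awk 'NR > 1 && NF >= 3 && $NF != "Connected" { print $2 }'
}

# Only call the real CLI when it is installed on this machine.
if command -v gluster >/dev/null 2>&1; then
    bad=$(gluster pool list | disconnected_peers)
    if [ -n "$bad" ]; then
        echo "peers not connected: $bad" >&2
    fi
fi
```

Keeping the parsing in a function that reads stdin makes it easy to try against saved output without a running cluster.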

Volume

# srv1 (or srv2)

gluster volume create test-volume replica 2 transport tcp \
srv1:/bricks/brick-srv1 srv2:/bricks/brick-srv2 force

gluster volume start test-volume

Volume

accessing files

# client

mkdir -p /volumes/test

# manual mount

mount -t glusterfs srv1:/test-volume /volumes/test

# fstab entry

srv1:/test-volume /volumes/test glusterfs defaults,_netdev,acl 0 0

# THAT IS IT!

echo "123" > /volumes/test/file123.txt

ls /volumes/test/

Volume info

gluster volume list

--

test-volume

gluster volume info

--

Volume Name: test-volume

Type: Replicate

Volume ID: ece85c0a-e86c-44e1-8cc9-5ab2f2d697c0

Status: Started

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: srv1:/bricks/brick-srv1

Brick2: srv2:/bricks/brick-srv2

Options Reconfigured:

performance.readdir-ahead: on

Useful commands

gluster volume add-brick

gluster volume remove-brick

gluster volume set <VOLNAME> <KEY> <VALUE>

gluster volume set vol-name auth.allow CLIENT_IP

gluster volume profile

gluster volume top

gluster snapshot create...

Replicated volume gotcha

network latency
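Because replication is synchronous, a write has to wait on the other node, so workloads with many small files tend to feel the network round trip most. A rough way to see this is to time the same batch of tiny writes on a local folder and on the mounted volume. This is a coarse sketch with whole-second resolution, not a benchmark; the function name `time_small_writes` and the file naming are hypothetical, and `/volumes/test` is the demo mount point.

```shell
#!/bin/sh
# time_small_writes DIR COUNT
# Writes COUNT tiny files into DIR, removes them, and prints the elapsed whole seconds.
time_small_writes() {
    dir=$1
    count=$2
    start=$(date +%s)
    i=1
    while [ "$i" -le "$count" ]; do
        echo "x" > "$dir/latency-test-$i.tmp"
        i=$((i + 1))
    done
    end=$(date +%s)
    rm -f "$dir"/latency-test-*.tmp
    echo $((end - start))
}

# Baseline on a local temporary folder.
local_dir=$(mktemp -d)
echo "local: $(time_small_writes "$local_dir" 1000)s"
rmdir "$local_dir"

# Uncomment on a client where the volume is mounted:
# echo "gluster: $(time_small_writes /volumes/test 1000)s"
```

Raising the file count makes the difference between the two numbers easier to see.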

Conclusion

GlusterFS

scalable

affordable

flexible

easy to use storage

The end

Questions?

Contact info

twitter.com/josipmaslac